In the era of artificial intelligence, political communication is no longer confined to rallies, televised debates, or traditional social media campaigns. A new layer has emerged: AI search presence.

This refers to how political leaders, parties, policies, and issues are represented, summarized, and contextualized within AI-powered search engines and conversational platforms such as ChatGPT, Google Gemini, Perplexity AI, and Microsoft Copilot.

Unlike keyword-based search engines that retrieve ranked links, AI search engines act as narrative builders, delivering direct answers that shape public perception instantly.

For political stakeholders, this shift is both an opportunity and a challenge, since visibility in AI search results can shape how voters understand a leader’s credibility, a party’s vision, or the trustworthiness of its policies.

Political branding must therefore evolve beyond traditional SEO and digital outreach strategies. In a world where citizens increasingly “ask AI” instead of “Googling,” the control of narratives has moved from search rankings to AI-generated summaries.

For example, when a voter asks, “What has this leader done for education?”, the answer from ChatGPT or Gemini may carry far more influence than a list of articles on Google.

This makes it critical for political brands to ensure their policy records, achievements, and leadership attributes are embedded in the data ecosystems that AI tools rely upon.

Failure to do so risks allowing opponents, biased reporting, or outdated information to dominate AI-driven narratives.

The importance of managing this presence lies in the fundamental difference between traditional Google search and AI-driven discovery systems.

Google search still leaves some agency to the voter: users browse multiple sources, interpret information, and make their own judgments. AI search, on the other hand, consolidates information into a single authoritative response, which can amplify existing biases or overlook nuance.

This centralization means political figures must be more proactive in curating their digital footprint, engaging with credible news outlets, producing machine-readable content, and monitoring how AI engines describe them.

In effect, the battlefield of political communication has shifted from visibility in rankings to visibility in narratives.

By defining and strategically building AI search presence, political brands can secure a vital advantage in shaping voter perceptions.

In upcoming elections, the leaders and parties that master this shift will not only dominate online visibility but also set the tone for public debate in a world where AI assistants are becoming the primary source of political information.

Evolution of Political Branding in Search

Political branding has steadily evolved from traditional media coverage and public rallies to digital platforms and search engines, but the rise of AI-powered search has transformed this space entirely. Earlier, search visibility depended on SEO rankings and social media influence, where voters actively browsed multiple sources.

Today, AI-driven platforms like ChatGPT and Gemini deliver single, narrative-driven responses that shape perceptions instantly.

This shift means political branding is no longer about being discoverable through keywords alone, but about ensuring that a leader’s policies, achievements, and identity are accurately embedded in the data ecosystems AI systems rely on.

As a result, political presence in AI search has become a decisive factor in how the public understands parties and leaders.

From Traditional SEO to AI-Driven Answer Engines

Political branding once depended on traditional SEO strategies, where visibility meant appearing high in Google search results.

Parties and leaders invested in optimized websites, backlinks, and content targeting voter-specific keywords.

That model has shifted with the rise of AI-driven answer engines such as ChatGPT, Google Gemini, Perplexity, and Microsoft Copilot. Instead of offering a list of sources, these systems generate direct, synthesized responses.

This shift reduces a voter’s exposure to multiple perspectives and places greater weight on how political figures are represented in AI-generated narratives.

Shifts in Voter Information-Seeking Behavior

Voter behavior has also evolved. Previously, citizens typed keywords such as “education policy” or “employment schemes” into search engines, then compared information across several articles.

Today, they increasingly use conversational prompts like “What has this leader done for jobs?” or “Which party supports farmers the most?” AI tools answer these questions in a conversational tone, which feels more authoritative and personal.

This transition means political branding strategies must adapt to a model where voters expect quick, summarized insights rather than long lists of links.

Examples of Political Leaders and Parties in AI Search

The shift to AI search has already shaped, and in some cases misrepresented, political brands. For instance, a leader like Narendra Modi may appear in AI search results framed around development and governance, while Rahul Gandhi may be described in terms of opposition politics and reformist rhetoric.

In Telangana, AI descriptions of Revanth Reddy may highlight welfare schemes and urban development, but gaps in data can lead to incomplete or skewed portrayals.

Internationally, AI responses about Joe Biden and Donald Trump can differ sharply, with Biden often framed through policy discussions and Trump through controversies.

These examples demonstrate how AI-driven summaries can amplify certain narratives while excluding others, directly influencing voter impressions.

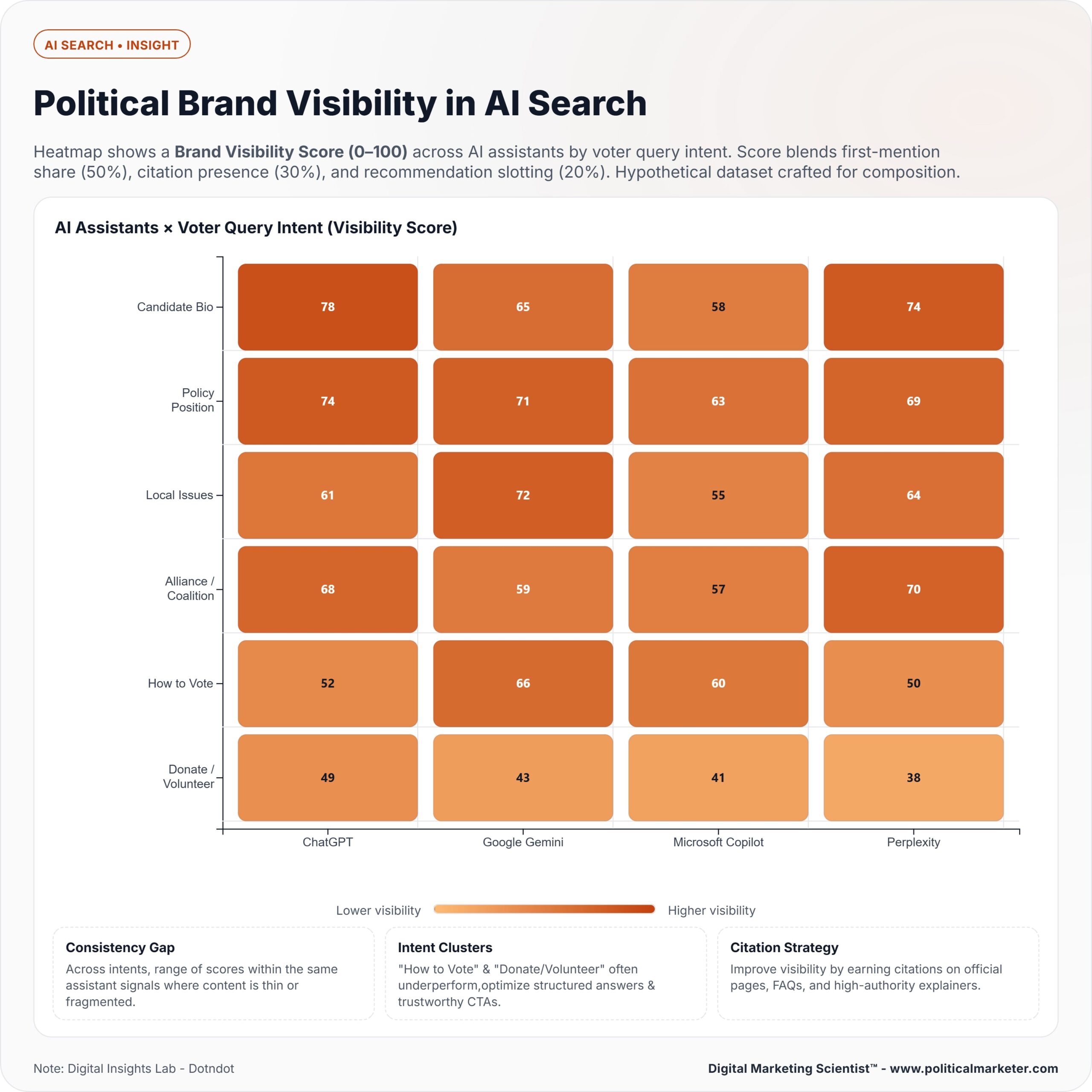

Ways to Improve Political Brand Visibility in AI Search

| Strategy | Description |
|---|---|
| Content Engineering | Publish structured FAQs, policy briefs, and manifesto summaries in machine-readable formats to ensure AI systems have access to accurate information. |
| Issue-Centric Presence | Cluster brand identity around key governance themes like education, jobs, welfare, and public safety to strengthen semantic associations. |
| Authority Signals | Leverage verified websites, think tank publications, and official manifestos to provide credible references for AI-generated responses. |
| Engagement Signals | Use debates, speeches, and viral social media references to create digital traces that AI models capture and amplify. |
| AI Reputation Management | Monitor AI outputs for inaccuracies and correct misinformation through verified media coverage and official communications. |
| Multilingual Optimization | Publish content in multiple languages to ensure visibility across regional and English-language AI queries, reducing representation gaps. |

AI Search as a New Battleground for Political Identity

AI-powered search has become a critical arena where political identity is shaped and contested. Unlike traditional search engines that provide a list of links, AI systems generate direct narratives about leaders, parties, and policies.

These narratives can influence how voters perceive credibility, leadership style, and policy effectiveness. Because the answers are presented as authoritative summaries, even small biases in training data, recent news coverage, or public discourse can redefine a political figure’s identity.

This makes AI search a battleground where parties must actively manage their visibility, correct misrepresentations, and ensure their achievements are consistently reflected in the responses voters receive.

How Large Language Models Summarize Political Figures, Parties, and Policies

Systems built on large language models, such as ChatGPT, Gemini, and Perplexity, do more than retrieve information.

They create synthesized summaries that present voters with what appears to be an authoritative interpretation of a leader, party, or policy. When a citizen asks about a leader’s record on healthcare or a party’s position on employment, the model delivers a consolidated response.

This response is often shaped by recurring themes in available data, such as news articles, policy documents, and online discourse.

Unlike traditional search engines, which allow voters to scan multiple sources, AI search reduces the interaction to a single narrative, making that output a powerful influence on political identity.

Risks of Algorithmic Framing and Bias in AI Answers

AI systems do not operate in a neutral vacuum. The way they frame political figures and issues depends on the quality and diversity of the sources they reference.

A leader consistently associated with corruption scandals in the press may have their identity framed in that context, even if the allegations are disputed. Similarly, policies may be simplified in ways that distort their intent or impact.

These risks highlight the challenge of algorithmic framing, where selective emphasis or omission can reinforce existing biases.

If left unchecked, such biases can misrepresent achievements or amplify negative narratives, shaping public opinion in ways that are not entirely accurate.

The Role of Training Data, Recency, and Reinforcement in Shaping Perception

The identity of a political brand in AI search is directly influenced by the model’s training data, the recency of information, and reinforcement mechanisms. Training data determines the baseline understanding of a leader or party, which means gaps or biases in that data can become entrenched in outputs.

Recency plays a critical role: AI systems that retrieve live web results often prioritize the most recent news coverage, which can cause temporary controversies to overshadow long-term policy work. Reinforcement, such as user feedback that platforms may use in later training, can further strengthen certain narratives over others.

If users consistently ask about corruption or scandals, the system may become more likely to reinforce those associations, even if they represent only one dimension of a leader’s record.

Key Factors Influencing Political Brand Visibility in AI Search

Political brand visibility in AI search depends on multiple interconnected factors.

Authority signals, such as verified websites, official policy documents, and credible media coverage, strengthen how leaders and parties are represented.

Semantic clustering around key issues like education, employment, and welfare helps AI systems associate political brands with voter priorities. Reputation data from news archives, fact-checking platforms, and public debates further shapes how narratives are built.

Engagement signals, including viral speeches, interviews, and social media references, also influence the weight AI tools assign to specific themes.

Together, these elements determine whether a political brand appears as credible, relevant, and consistent in AI-generated responses.

Authority Signals: Verified Websites, Think Tank Publications, Manifestos

Authority signals play a central role in how AI systems assess and present political brands. Verified websites, including official party pages and government portals, provide structured and credible information that AI tools rely on when generating responses.

Think tank publications and research papers contribute additional depth by offering independent analysis of policies, which strengthens the perception of expertise and legitimacy.

Manifestos, when clearly published and accessible online, act as definitive reference points that establish a party’s stated positions.

Together, these sources create a foundation of trust that improves the accuracy and consistency of political representation in AI search.

Verified Websites

AI search engines prioritize information from sources that carry official verification. Political parties, government agencies, and recognized leaders must maintain active and accurate websites, since these are often the first points of reference for AI systems.

A well-structured website with updated policy pages, press releases, and leader profiles ensures that authoritative information is available in machine-readable formats. This reduces the risk of AI models pulling content from unreliable or biased sources.

Think Tank Publications

Independent research from think tanks plays a crucial role in enhancing the credibility of political brands. These organizations provide policy analysis, data-driven evaluations, and comparative studies that AI systems often cite to support narratives.

When think tanks evaluate a party’s performance on issues such as economic reform, healthcare, or climate policy, their findings contribute to the context AI engines use to frame political identity. Politicians and parties that engage with or reference credible think tank work increase their visibility in AI-generated summaries.

Manifestos

Election manifestos remain one of the most influential authority signals because they represent direct commitments to voters. Publishing manifestos online in accessible formats, with clear issue-based sections, ensures that AI systems can capture policy positions accurately.

If a manifesto is poorly documented or inconsistently archived, AI-generated responses may rely on secondary reporting instead of the manifesto itself.

Clear, structured, and updated manifestos serve as a foundation for shaping how parties are represented in AI-driven discovery.

Why Authority Signals Matter

Authority signals establish credibility, consistency, and authenticity in AI search presence.

Without them, political brands risk being defined by external commentary, opposition narratives, or outdated reports. By investing in verified websites, supporting evidence-based research, and publishing transparent manifestos, parties and leaders strengthen their digital foundation and improve how AI platforms describe them to voters.

Semantic Clusters: Issue-Based Narratives (Education, Jobs, Governance)

Semantic clusters help AI systems associate political brands with specific voter concerns such as education, jobs, and governance.

When leaders or parties consistently link their identity to clear policy themes, AI search tools begin to frame them around those issues. For example, frequent references to job creation or education reform in manifestos, speeches, and media coverage reinforce those associations in AI-generated responses.

Building strong semantic clusters ensures that a political brand is tied to priority voter concerns rather than being defined by isolated controversies or opponent narratives.

Semantic Clusters

Semantic clusters refer to the way AI systems group related terms, topics, and narratives around a political leader or party.

When voters ask questions about education, employment, or governance, AI models generate responses based on the associations they find most consistent across credible sources.

If a party’s content, speeches, and manifestos repeatedly stress education reform, job creation, and governance improvements, these issues become dominant clusters that shape its identity in AI search.
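The consistency idea above can be approximated, very roughly, by checking how often each issue theme recurs across a party's own published texts. The sketch below is a hypothetical keyword proxy, not a model of how any AI system forms associations; the `THEMES` vocabulary, the `theme_coverage` helper, and the sample sentences are all illustrative assumptions.

```python
import re
from collections import Counter

# Hypothetical theme vocabularies; a real audit would need curated,
# multilingual keyword lists rather than these English placeholders.
THEMES = {
    "education": ["education", "school", "teacher", "scholarship"],
    "jobs": ["job", "jobs", "employment", "skills"],
    "governance": ["governance", "transparency", "accountability"],
}


def theme_coverage(documents: list[str], themes: dict[str, list[str]]) -> dict[str, float]:
    """Return the share of documents that mention each theme at least once.

    This is a crude keyword proxy for how consistently a theme recurs across
    a party's own content; it does not model how LLMs build associations.
    """
    counts = Counter()
    for doc in documents:
        # Lowercase and split into alphabetic words for whole-word matching.
        words = set(re.findall(r"[a-z]+", doc.lower()))
        for theme, keywords in themes.items():
            if words.intersection(keywords):
                counts[theme] += 1
    total = len(documents) or 1  # avoid division by zero on an empty corpus
    return {theme: counts[theme] / total for theme in themes}


docs = [
    "Our manifesto expands school funding and teacher training.",
    "The employment guarantee created rural jobs this year.",
    "New teacher recruitment drive announced in the budget speech.",
]
print(theme_coverage(docs, THEMES))
```

A theme that appears in only a small share of documents (here, governance) is a candidate for the weak or fragmented clustering this article warns about.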

How Issue-Based Narratives Strengthen Political Visibility

Political brands that consistently communicate their work in specific domains create stronger recognition in AI-driven responses.

For example, a leader who frequently highlights initiatives on rural employment will often be described in AI outputs as being linked to job creation.

Similarly, parties that focus on governance reforms or digital education schemes are framed within those narratives.

By embedding issue-focused content across official websites, media articles, and policy documents, political actors increase the likelihood that AI tools summarize them in line with these themes.

Risks of Weak or Fragmented Clusters

When parties or leaders fail to establish strong semantic clusters, AI systems may rely on scattered or negative references.

This can result in responses dominated by controversies, opposition criticism, or incomplete descriptions of policies.

Weak clustering also makes it easier for opponents to shape narratives by filling gaps with their own messaging. Ensuring consistency and repetition of positive issue-based themes helps reduce these risks.

Why Semantic Clusters Matter

Semantic clusters anchor political brands to voter priorities in AI search. They determine whether a leader is recognized for constructive contributions like job growth and governance reform, or overshadowed by short-term disputes.

Building these clusters with clarity and consistency ensures that AI-generated responses reflect a balanced and credible representation of political identity.

Reputation Data: News Coverage, Fact-Check Portals, Wikipedia, Social Media Archives

Reputation data plays a decisive role in how AI systems construct political identities.

News coverage establishes recurring themes that influence whether a leader is framed around achievements or controversies. Fact-check portals provide corrective references that AI models often integrate when addressing disputed claims.

Wikipedia offers a structured and widely trusted source that frequently acts as a baseline for political summaries. Social media archives capture public discourse, including viral debates and trending narratives, which can amplify specific associations.

Together, these sources shape how political brands are represented in AI search, making reputation management across multiple channels essential for accuracy and balance.

News Coverage

News media strongly influence how AI systems frame political leaders and parties. Consistent coverage around policy successes or failures creates recurring patterns that large language models reference in their outputs.

For example, sustained reporting on welfare schemes strengthens a leader’s association with social development, while repeated coverage of corruption cases, even if contested, can anchor negative associations.

The tone and frequency of media reporting directly shape the visibility and credibility of political brands in AI search.

Fact-Check Portals

Fact-checking platforms provide corrective inputs that AI models often integrate when responding to politically charged queries. If misinformation spreads widely, fact-check portals serve as counterweights by supplying verifiable information.

These corrections influence how AI systems present disputed claims, either by labeling them as false or by providing context. Leaders and parties that attract significant fact-check attention, whether positive or negative, will see that coverage reflected in AI-generated summaries.

Wikipedia

Wikipedia remains one of the most frequently referenced sources for AI-driven political content. Its structured format, citations, and global accessibility make it a central anchor for summarizing political figures, parties, and policies.

A well-maintained Wikipedia entry ensures that AI tools have access to accurate timelines, policy details, and biographical information. Conversely, poorly updated or biased entries risk shaping distorted outputs, since AI models often rely on Wikipedia as a baseline source.

Social Media Archives

Social media provides a record of public sentiment and viral discourse. AI tools draw from these archives to highlight trending narratives, quotes, or controversies linked to political brands. For example, a viral speech or hashtag campaign can influence how a leader is described in AI responses, even if it reflects short-term momentum.

Because social media archives often amplify both support and criticism, they can either strengthen or weaken a political brand’s identity, depending on the dominant narratives that persist.

Why Reputation Data Matters

Reputation data integrates official records, media coverage, and public sentiment into the foundation that AI systems use to define political identity. Leaders and parties that manage their presence across credible news outlets, fact-check platforms, Wikipedia, and social media maintain greater control over how they are represented.

Without this active management, AI models risk producing narratives shaped by incomplete data, biased reporting, or viral controversies rather than balanced representations of political work.

Engagement Signals: Debates, Speeches, and Viral Social Media References

Engagement signals shape how AI systems measure the relevance and visibility of political brands. Public debates and parliamentary speeches often become primary references that highlight a leader’s positions on key issues.

Viral social media moments, whether supportive or critical, amplify these signals by generating large volumes of content that AI models capture and reuse in summaries. When leaders consistently engage with the public through speeches or participate in widely covered debates, their visibility in AI search strengthens.

These signals ensure that political brands remain active in digital discourse rather than being overshadowed by opponents or outdated narratives.

Debates

Debates provide direct visibility into a leader’s positions on policy issues and their ability to challenge opponents.

AI systems capture transcripts, news coverage, and post-debate analysis, then use these as references when summarizing political identity. A strong performance in debates often becomes part of the narrative repeated in AI search, while poor performances can anchor negative perceptions.

Since both mainstream media and social media widely cover debates, they create lasting digital traces that influence AI outputs.

Speeches

Speeches remain one of the most consistent engagement signals because they represent official communication from leaders and parties. Policy announcements, public rallies, and parliamentary addresses are archived across multiple platforms, making them accessible to AI models.

Well-documented speeches help shape how political brands are described in AI search, especially when leaders repeat clear issue-based themes such as education, employment, or governance reform.

Speeches that gain media traction or public discussion increase their weight in AI-generated narratives.

Viral Social Media References

Social media creates amplification loops that can magnify both support and criticism. Viral moments, such as a leader’s statement, an emotional exchange, or a trending hashtag, quickly generate a surge of references across online platforms.

AI systems track these patterns and often integrate them into their responses. While virality boosts visibility, it also carries the risk of misrepresentation, since trending content may highlight controversy rather than substantive policy.

Leaders who actively manage their social media presence are better positioned to influence how AI models interpret these signals.

Why Engagement Signals Matter

Engagement signals determine whether a political brand appears active, relevant, and connected to voter concerns in AI search results. Consistent participation in debates, delivery of well-structured speeches, and strategic engagement with social media ensure that AI systems reflect a balanced view of a leader’s identity.

Without these signals, political brands risk being overshadowed by opponents or defined by isolated controversies.

Challenges for Political Parties and Leaders

Political parties and leaders face significant challenges in maintaining brand visibility within AI search. Narrative hijacking can occur when opponents or biased sources dominate the available data, leading AI systems to frame leaders through negative storylines.

Information gaps, such as outdated or incomplete records, reduce the accuracy of AI-generated responses. Defamation risks also increase when unverified claims circulate widely and become embedded in AI outputs. Additionally, regional language biases can limit visibility for parties operating outside English-dominant contexts, creating uneven representation.

These challenges highlight the need for proactive strategies to manage how political brands are presented in AI-driven environments.

Narrative Hijacking: When AI Search Reflects Opponent Propaganda

Narrative hijacking occurs when AI search results amplify narratives created by political opponents rather than reflecting a leader’s own record or policies. If propaganda, negative campaigning, or repeated criticism dominate online content, AI systems may prioritize these references in their summaries.

This leads to distorted portrayals where opponents shape voter perceptions more effectively than the political brand itself. Preventing narrative hijacking requires consistent publication of credible, verifiable information so that AI platforms have balanced sources to reference when generating responses.

Mechanism

Narrative hijacking occurs when political opponents dominate the digital information space with propaganda, criticism, or repeated negative framing. Since AI search systems depend on the volume and frequency of available content, they can unintentionally amplify these narratives.

Instead of presenting balanced perspectives, the outputs reflect the opponent’s framing, which distorts the political brand’s identity.

Impact on Political Brand Visibility

When narrative hijacking occurs, AI systems often highlight controversies, accusations, or one-sided critiques.

For voters, the result is a skewed picture that reduces trust in the leader or party, even if factual evidence or policy records tell a different story. This creates an environment where opponents effectively shape how citizens perceive political figures, bypassing traditional fact-checking or balanced reporting.

Role of Information Ecosystems

The risk of narrative hijacking grows when official communication channels are weak or inconsistent. If leaders and parties fail to publish verifiable information on policies, speeches, and manifestos, AI tools fall back on secondary sources, which may already carry bias.

News cycles that focus heavily on negative stories, coupled with viral amplification on social media, further entrench the opponent’s narrative.

Countermeasures

To reduce the influence of narrative hijacking, political actors must actively build a reliable digital footprint.

This includes maintaining authoritative websites, ensuring timely press releases, publishing structured manifestos, and engaging with independent think tank analyses. Proactive communication provides AI systems with diverse, credible data points, which minimizes the likelihood of propaganda-driven summaries.

Continuous monitoring of AI search outputs also helps detect when opponents are shaping the narrative, allowing parties to respond quickly with corrective information.
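Monitoring of this kind can start with a simple checklist applied to saved AI answers. The sketch below assumes the answers have already been collected (manually or through whatever access a team has; no vendor API is assumed) and compares each one with themes the party has documented and with watch terms such as an opponent's slogans. The `audit_answer` function, the `AnswerAudit` record, and the sample text are hypothetical, and plain substring matching will miss paraphrases, so a flag means "send to a human reviewer", not "evidence of hijacking".

```python
from dataclasses import dataclass, field


@dataclass
class AnswerAudit:
    """Result of checking one saved AI answer against a party's own record."""
    query: str
    missing_themes: list[str] = field(default_factory=list)
    flagged_terms: list[str] = field(default_factory=list)

    @property
    def needs_review(self) -> bool:
        # Any gap or any watch-term hit sends the answer to a human reviewer.
        return bool(self.missing_themes or self.flagged_terms)


def audit_answer(query: str, answer: str,
                 expected_themes: dict[str, list[str]],
                 watch_terms: list[str]) -> AnswerAudit:
    """Compare an AI-generated answer with themes the party has documented.

    expected_themes maps a theme name to phrases that should appear if the
    answer reflects the published record; watch_terms are phrases that should
    trigger review. Matching is case-insensitive substring search.
    """
    text = answer.lower()
    missing = [theme for theme, phrases in expected_themes.items()
               if not any(p.lower() in text for p in phrases)]
    flagged = [term for term in watch_terms if term.lower() in text]
    return AnswerAudit(query=query, missing_themes=missing, flagged_terms=flagged)


answer = ("The leader is known for a rural employment scheme, "
          "although critics cite a land allotment controversy.")
report = audit_answer(
    "What has this leader done for jobs?",
    answer,
    expected_themes={"jobs": ["employment", "jobs"], "education": ["school", "education"]},
    watch_terms=["controversy", "scandal"],
)
print(report.missing_themes, report.flagged_terms, report.needs_review)
# prints: ['education'] ['controversy'] True
```

Here the answer mentions employment but says nothing about education and contains a watch term, so it is marked for review.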

Information Gaps: Missing or Outdated Data About Leaders and Policies

Information gaps weaken political brand visibility in AI search by leaving models with incomplete or outdated references. When leaders or parties fail to update official websites, manifestos, or policy records, AI systems rely on secondary sources that may be inaccurate or biased.

This can result in voters receiving responses that overlook recent achievements, highlight old controversies, or misrepresent policy positions. Closing these gaps requires consistent publication of updated, verifiable information so AI platforms present a current and accurate picture of political identity.

Scope

Information gaps occur when AI systems lack access to updated or comprehensive data about political leaders and their policies.

These gaps arise when official websites, policy documents, or manifestos are not consistently maintained, leaving AI models dependent on older or secondary references.

As a result, the content that voters see may not reflect the current work of leaders or the evolving positions of parties.

Impact on Political Brand Visibility

When authoritative data is missing or outdated, AI-generated responses often rely on news articles or commentary that may be incomplete or biased.

This can lead to portrayals where past controversies overshadow more recent achievements, or where major policy reforms are ignored because they were never documented in accessible formats.

Outdated data can also harm credibility, as voters receive information that does not align with current political realities.

Why Information Gaps Occur

Several factors contribute to these gaps. Political parties may focus on short-term communication strategies, such as speeches and social media posts, while neglecting to update long-term digital records.

Inconsistent documentation of policy implementation or election promises also creates holes in the data ecosystem. Regional parties often face an additional challenge when information is unavailable in multiple languages, further limiting visibility in AI-driven search results.

Addressing the Problem

Closing information gaps requires consistent publication of accurate and updated content across all official digital channels. Parties and leaders should maintain structured archives of manifestos, policy briefs, and progress reports that AI systems can easily access and utilize.

Using metadata and schema markup improves machine readability, making it more likely that AI tools capture the most up-to-date and accurate information. Regular monitoring of AI-generated responses can also help identify areas where missing data leads to misrepresentation, enabling corrective action.
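As one concrete form of schema markup, a policy FAQ page can carry schema.org `FAQPage` data in JSON-LD, including a `dateModified` value that signals how current the content is. The types and properties used below (`FAQPage`, `Question`, `Answer`, `mainEntity`, `acceptedAnswer`, `dateModified`) come from the schema.org vocabulary; the `faq_jsonld` helper, URL, and question text are placeholders, and whether a particular AI system reads this markup is an assumption rather than something this sketch can guarantee.

```python
import json
from datetime import date


def faq_jsonld(page_url: str, qa_pairs: list[tuple[str, str]], modified: date) -> str:
    """Build schema.org FAQPage markup as a JSON-LD string.

    The output is meant to be embedded in a
    <script type="application/ld+json"> tag on the FAQ page itself.
    """
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "url": page_url,
        "dateModified": modified.isoformat(),  # e.g. "2024-01-15"
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in qa_pairs
        ],
    }
    # ensure_ascii=False keeps non-Latin scripts readable in the output.
    return json.dumps(data, indent=2, ensure_ascii=False)


print(faq_jsonld(
    "https://example.org/manifesto/faq",
    [("What does the manifesto promise on education?",
      "Placeholder answer: summarize the commitment and link to the full section.")],
    date(2024, 1, 15),
))
```

Updating `dateModified` whenever an answer changes keeps the markup consistent with the regular updates this section recommends.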

Defamation Risks: How Unverified Claims Get Embedded into AI Responses

Defamation risks arise when AI systems incorporate unverified or misleading claims into their summaries of political leaders and parties. Since these models draw heavily from news reports, social media discussions, and online commentary, false allegations can be repeated as part of an authoritative response.

Once embedded, such claims distort political identity, erode public trust, and are difficult to counter because voters may assume the AI-generated answer is accurate and credible. Addressing this risk requires political actors to monitor AI outputs, challenge misinformation through credible sources, and ensure a steady flow of verified content that reduces the weight of unreliable data in AI-driven narratives.

How Defamation Risks Arise

AI systems generate responses by analyzing patterns across news coverage, social media posts, blogs, and archived discussions. When unverified allegations circulate widely, the models may repeat them as part of their outputs.

Since the AI delivers these claims in a structured and authoritative tone, voters may assume the information is accurate, even if no credible evidence supports it.

Impact on Political Identity

Unverified claims embedded in AI search can severely damage political reputations. Leaders and parties risk being defined by allegations instead of policies or achievements. Once these claims become part of AI-generated narratives, they spread quickly because many voters treat AI answers as trustworthy summaries.

This can erode public confidence, cause long-lasting reputational harm, and influence political discourse in ways that are difficult to rectify.

Why AI Models Are Susceptible

AI tools prioritize widely available and frequently cited content. If false allegations dominate digital coverage or trend on social media, they can overshadow verified information. Models often cannot independently validate sources, which increases the risk of integrating misinformation.

Fact-checking portals may correct false claims, but if their coverage is limited or less visible, unverified narratives still gain prominence in AI responses.

Addressing Defamation Risks

Parties and leaders must monitor how AI systems present their identity and challenge inaccuracies promptly. Publishing consistent, verifiable information through official websites, press releases, and reputable media outlets strengthens the digital record available to AI models.

Engaging with fact-check organizations and ensuring quick responses to false claims also helps reduce their impact. By maintaining a strong and credible information ecosystem, political actors can minimize the likelihood of defamatory content shaping AI search visibility.

Regional Language Bias: Visibility Gaps in Non-English AI Search Outputs

Regional language bias limits political brand visibility when AI systems prioritize English sources over local languages. Leaders and parties active in states or regions with strong vernacular media often face weaker representation because policy details, speeches, and manifestos are under-documented in regional languages online.

As a result, AI-generated responses may provide incomplete or distorted portrayals that fail to capture the full complexity of a political identity. Closing this gap requires translating official content, ensuring consistent coverage in regional media, and making data accessible across multiple languages so AI platforms reflect a more accurate and inclusive picture.

Scope

Regional language bias occurs when AI search engines give preference to English-language sources while overlooking or underrepresenting local languages. For political leaders and parties operating in multilingual countries like India, this bias creates visibility gaps.

Even if a leader has strong influence at the regional level, the lack of accessible online content in the local language can lead to incomplete or distorted portrayals in AI responses.

Impact on Political Brand Visibility

When AI systems fail to capture regional language material, they present an incomplete picture of political identity. Policies, welfare programs, or speeches aimed at regional audiences may not appear in search results, reducing recognition of a leader’s full scope of work.

This also creates an imbalance where national-level leaders with more English-language documentation dominate AI summaries, while regional parties remain underrepresented despite their local strength.

Why These Gaps Occur

Several factors contribute to regional language bias. Many parties and leaders do not translate their manifestos, press releases, or websites into regional languages, which limits machine-readable content. Regional media outlets often focus on print or television rather than structured digital archives, leaving AI systems with fewer references.

Additionally, language models are generally less mature for many regional languages than for English, partly because far less digitized text exists in those languages, which makes it harder for AI to integrate vernacular data effectively.

Addressing the Problem

Reducing regional language bias requires proactive steps. Parties must publish consistent, well-structured content in regional languages, including manifestos, policy briefs, and official speeches, to ensure transparency and accountability. Collaborating with local media outlets to create searchable digital archives improves accessibility for AI systems.

Expanding translation efforts and adopting metadata standards across languages also helps ensure that regional narratives are visible in AI search outputs. By strengthening multilingual data availability, political actors can build a more balanced and inclusive representation of their identity.

Strategies for Enhancing Political Brand Visibility in AI Search

Enhancing political brand visibility in AI search requires establishing a robust, credible, and accessible digital presence. Parties and leaders can achieve this by publishing structured content such as manifestos, FAQs, and policy briefs in machine-readable formats that AI systems can easily process.

Consistently linking their brand with issue-based narratives like education, jobs, and governance strengthens semantic associations. Monitoring AI-generated outputs helps identify misrepresentations, while timely corrections through credible media and fact-check portals reduce the impact of misinformation.

Multilingual content ensures visibility across regional contexts, preventing gaps in non-English responses. Together, these strategies allow political actors to shape balanced and accurate representations of their identity in AI-driven environments.

Content Engineering

Content engineering ensures that political information is structured in ways AI systems can easily process and summarize. By publishing manifestos, policy briefs, FAQs, and press releases in machine-readable formats with explicit metadata and schema markup, parties and leaders strengthen their visibility in AI search.

Well-organized digital content reduces reliance on secondary or biased sources and increases the likelihood that AI platforms generate accurate and balanced responses.

This approach enables political brands to manage how their achievements and positions are portrayed in AI-driven narratives.

Structured FAQs, Policy Briefs, and Manifesto Summaries

Political leaders and parties can strengthen their AI search visibility by producing structured content that directly addresses voter questions.

FAQs provide clear answers to common concerns, such as education reforms, employment policies, or welfare programs.

Policy briefs present concise, evidence-based explanations of initiatives, while manifesto summaries highlight core commitments in an accessible format. This type of structured documentation ensures that AI systems have authoritative material to reference instead of relying on secondary interpretations.

Machine-Readable Content with Schema Markup

Publishing political content in formats optimized for machine processing is critical for AI visibility. Schema markup and metadata allow search engines and AI tools to recognize the structure and context of the information.

For example, labeling a section as a “policy update” or a “manifesto pledge” helps AI systems distinguish between promises, achievements, and commentary. Making manifestos, reports, and press releases machine-readable can improve both their visibility and the accuracy of AI-generated summaries.
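The FAQ structure described above can be expressed with the schema.org `FAQPage` type, serialized as JSON-LD and embedded in a web page. The Python sketch below is a minimal illustration, assuming the questions and answers are already approved text; the function names (`build_faq_jsonld`, `to_script_tag`) and the example content are hypothetical. Labels such as “manifesto pledge” are not standard schema.org types, so the sketch sticks to the general `FAQPage`, `Question`, and `Answer` types. Markup helps crawlers parse a page, but it does not guarantee that any AI platform will use it.

```python
import json


def build_faq_jsonld(faqs):
    """Build a schema.org FAQPage object from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in faqs
        ],
    }


def to_script_tag(data):
    """Serialize the object as a JSON-LD <script> tag for an HTML page."""
    # ensure_ascii=False keeps regional scripts (e.g. Devanagari, Telugu) readable.
    body = json.dumps(data, ensure_ascii=False, indent=2)
    # Escaping "</" stops answer text from closing the <script> element early;
    # "<\/" is still valid JSON and decodes back to "</".
    body = body.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'


# Hypothetical example content; real answers would be copied verbatim
# from the party's official policy documents.
example_faqs = [
    ("What does the manifesto promise on school education?",
     "Placeholder answer summarizing the education pledge."),
    ("What has the employment scheme achieved so far?",
     "Placeholder answer citing the official published figures."),
]

if __name__ == "__main__":
    print(to_script_tag(build_faq_jsonld(example_faqs)))
```

The printed tag would sit in the page's HTML alongside the visible FAQ text, so the markup and the human-readable answers stay identical.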

Why Content Engineering Matters

Without structured and machine-readable content, AI systems default to news coverage, commentary, or social media references, which may carry bias or incomplete details. By investing in content engineering, political actors can directly shape how their identity, policies, and performance are represented in AI search.

This approach creates consistency, strengthens authority signals, and provides voters with accurate and verifiable information when interacting with AI-driven platforms.

Issue-Centric Presence

Issue-centric presence ensures that political brands are consistently associated with voter priorities such as education, jobs, welfare, and governance. By repeatedly framing their identity around these themes in manifestos, speeches, and digital content, leaders and parties strengthen semantic clusters that AI systems recognize and amplify.

This approach enables AI search engines to link political brands to constructive narratives, rather than allowing them to be defined by controversies or opponent-driven messaging.

Clustering Brand Presence Around Governance Themes

Political leaders and parties strengthen their AI search visibility when they consistently tie their identity to governance themes such as education, infrastructure, health, and economic reform. Repetition across speeches, manifestos, and digital platforms ensures that AI models build strong associations between the brand and these policy domains.

This clustering helps the AI identify a leader’s work not as isolated actions but as part of a broader governance agenda.
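To check whether published material actually repeats these governance themes, a team could run a simple keyword audit over its own speeches and documents. The Python sketch below is a minimal illustration, assuming plain-text copies of each document are available; the theme vocabulary in `THEMES`, the function names, and the sample snippets are hypothetical, and keyword counting is only a crude proxy for how AI models form associations.

```python
import re

# Hypothetical theme vocabulary; a real audit would use the party's own
# policy taxonomy plus regional-language equivalents of each keyword.
THEMES = {
    "education": ["school", "education", "teacher"],
    "infrastructure": ["road", "metro", "infrastructure"],
    "health": ["hospital", "health", "clinic"],
    "economic reform": ["tax", "investment", "jobs"],
}


def mentions(text, keyword):
    """True if a word in the text starts with the keyword (so 'roads' matches 'road')."""
    return re.search(r"\b" + re.escape(keyword), text.lower()) is not None


def theme_coverage(documents, themes=THEMES):
    """Count how many documents mention each theme at least once."""
    coverage = {theme: 0 for theme in themes}
    for text in documents.values():
        for theme, keywords in themes.items():
            if any(mentions(text, k) for k in keywords):
                coverage[theme] += 1
    return coverage


def weak_themes(coverage, min_docs=1):
    """Themes that appear in fewer than `min_docs` documents."""
    return [theme for theme, count in coverage.items() if count < min_docs]


# Hypothetical snippets standing in for speeches, manifestos, and press notes.
docs = {
    "speech_1": "Every village school will get trained teachers.",
    "manifesto": "We will build roads and a new metro line, and create jobs.",
    "press_note": "Our teachers' training budget has doubled.",
}

if __name__ == "__main__":
    cov = theme_coverage(docs)
    print(cov)               # number of documents per theme
    print(weak_themes(cov))  # themes that never came up
```

In this toy set, health never appears, which is the kind of gap the clustering strategy is meant to close.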

Owning the Narrative on Critical Voter Issues

To maintain control over AI-generated portrayals, political actors must dominate discourse around issues that matter most to voters, such as jobs, women’s safety, and welfare schemes.

By proactively shaping the content ecosystem through verified data, structured policy updates, and authoritative commentary, leaders prevent their opponents or biased sources from defining these narratives.

Owning the conversation on high-priority voter concerns ensures that AI search outputs reflect the brand’s achievements and commitments rather than external framing.

Why Issue-Centric Presence Matters

Without issue-centric branding, AI systems may overemphasize controversies or short-term news cycles. A deliberate focus on governance themes and voter priorities anchors political identity in constructive contributions rather than reactive narratives.

This approach creates consistency across AI outputs, strengthens trust among voters, and safeguards the accuracy of political representation in AI-driven environments.

AI Reputation Management

AI reputation management focuses on monitoring how political leaders and parties are represented in AI-generated responses and correcting inaccuracies before they spread. Since AI systems summarize information from news, social media, and archives, unverified claims or biased reporting can distort political identity.

By actively tracking outputs from platforms like ChatGPT, Gemini, and Perplexity, parties can identify misrepresentations and respond with verified content through official websites, fact-checks, and credible media coverage. This ongoing process ensures that AI tools reflect balanced and accurate portrayals of political brands.

Monitoring How AI Tools Describe Leaders and Parties

Political actors must actively monitor how AI platforms such as ChatGPT, Gemini, and Perplexity describe their identity, policies, and performance. These tools synthesize content from news reports, social media discussions, and digital archives, which means that inaccurate or biased material can quickly influence outputs. By tracking recurring descriptions and narratives, parties can detect when their brand is being misrepresented or overshadowed by opponent-driven messaging.
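Monitoring of this kind can start with a simple log: record the same prompt on the same platform at regular intervals, then compare how often watched terms appear in each answer. The Python sketch below assumes answers are collected by hand or through whatever access a team already has (collection is not shown); the `Snapshot` record, the `WATCH_TERMS` list, and the example answers are hypothetical.

```python
import re
from dataclasses import dataclass

# Hypothetical watch list of descriptors the monitoring team cares about.
WATCH_TERMS = ["education", "welfare", "corruption", "scandal"]


@dataclass
class Snapshot:
    """One AI answer recorded by the monitoring team (collection not shown)."""
    platform: str  # e.g. "ChatGPT", "Gemini", "Perplexity"
    date: str      # ISO date the answer was recorded
    prompt: str
    answer: str


def count_terms(text, terms=WATCH_TERMS):
    """Count whole-word, case-insensitive occurrences of each term."""
    lowered = text.lower()
    return {t: len(re.findall(r"\b" + re.escape(t) + r"\b", lowered)) for t in terms}


def compare(earlier, later, terms=WATCH_TERMS):
    """List watched terms that became more or less frequent between two answers."""
    if (earlier.platform, earlier.prompt) != (later.platform, later.prompt):
        raise ValueError("compare answers from the same platform and prompt")
    before = count_terms(earlier.answer, terms)
    after = count_terms(later.answer, terms)
    return {
        "rising": [t for t in terms if after[t] > before[t]],
        "falling": [t for t in terms if after[t] < before[t]],
    }


# Hypothetical answers to the same prompt recorded a month apart.
prompt = "What has this leader done for education?"
earlier = Snapshot("Gemini", "2024-04-01", prompt,
                   "The leader expanded school education and welfare schemes.")
later = Snapshot("Gemini", "2024-05-01", prompt,
                 "The leader expanded education schemes, though a corruption "
                 "allegation is widely discussed.")

if __name__ == "__main__":
    print(compare(earlier, later))
```

Comparing only matching platform and prompt pairs keeps the signal about changes in the AI's framing rather than differences in the question asked.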

Correcting Misinformation Through Verified Media Coverage

Once misinformation is identified, a swift and authoritative response is necessary. Parties can counter inaccuracies by publishing verified statements, press releases, and fact-checked reports through official websites and credible media outlets.

Since AI systems often prioritize widely cited and structured sources, ensuring that corrections appear in trusted publications increases the chances that updated information will replace false claims in future outputs. This proactive approach reduces the influence of propaganda and strengthens the accuracy of political representation in AI search.

Why Reputation Management Matters

Unverified claims, negative framing, or outdated data can easily distort political identity in AI-driven environments. Without systematic monitoring and timely correction, these distortions risk becoming entrenched in how voters perceive leaders and parties.

AI reputation management creates accountability, ensures consistency across platforms, and protects political brands from being defined by misinformation rather than verified achievements.

Multilingual Optimization

Multilingual optimization ensures that political brands remain visible in both English and regional AI search outputs. Many leaders and parties lose representation when content is available only in English, leaving gaps in areas where voters rely on local languages.

By translating manifestos, speeches, and policy updates into multiple languages and making them accessible in machine-readable formats, parties improve their chances of appearing accurately across AI platforms. This approach reduces regional bias and ensures that political identity is consistently represented to diverse voter groups.

Ensuring Brand Presence Across English and Regional AI Queries

Political leaders and parties must ensure their digital presence extends beyond English to include regional languages that voters actively use. AI systems often draw from content that is most available and accessible, which means political brands with limited multilingual material risk being underrepresented.

For instance, a leader with strong regional influence may appear far less visible in AI search results if manifestos, policy documents, and speeches are not translated into local languages.

Why Multilingual Optimization Is Necessary

Many voters in multilingual countries prefer to consume political information in their native language.

When AI systems lack sufficient regional content, they rely disproportionately on English sources, creating gaps that distort representation.

This not only weakens local visibility but can also shift voter perception, as achievements communicated in English may not reach regional audiences through AI platforms.

How to Strengthen Multilingual Visibility

Parties should translate manifestos, policy briefs, speeches, and campaign materials into multiple regional languages and publish them in machine-readable formats.

Incorporating metadata and schema markup for each language improves accessibility for AI tools. Partnering with regional media outlets to digitize content ensures broader coverage, while consistent updates across languages keep AI systems supplied with accurate and current information.
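One widely used metadata convention for multilingual web pages is the `hreflang` annotation, which tells search crawlers which URL serves which language. The Python sketch below generates those link tags and flags documents still missing a required translation; the base URL, the `/<lang>/` path layout, the language list (English, Hindi, Telugu, Tamil), and the publication data are assumptions for illustration, and whether a given AI platform reads `hreflang` is not guaranteed.

```python
from html import escape

# Hypothetical site layout: each translation lives at <base>/<lang>/.
BASE_URL = "https://example.org/manifesto"
# Assumed required languages (ISO 639-1): English, Hindi, Telugu, Tamil.
REQUIRED_LANGUAGES = ["en", "hi", "te", "ta"]


def hreflang_links(base_url, available, default="en"):
    """Build alternate-language <link> tags for the translations that exist."""
    tags = [
        f'<link rel="alternate" hreflang="{lang}" href="{escape(base_url)}/{lang}/">'
        for lang in available
    ]
    # x-default names the page to show when no listed language matches.
    tags.append(
        f'<link rel="alternate" hreflang="x-default" href="{escape(base_url)}/{default}/">'
    )
    return tags


def missing_translations(documents, required=REQUIRED_LANGUAGES):
    """Map each document to the required languages it is not yet published in."""
    gaps = {}
    for name, languages in documents.items():
        missing = sorted(set(required) - set(languages))
        if missing:
            gaps[name] = missing
    return gaps


# Hypothetical publication status of three official documents.
published = {
    "manifesto": ["en", "hi", "te"],
    "education_brief": ["en"],
    "welfare_faq": ["en", "hi", "te", "ta"],
}

if __name__ == "__main__":
    for tag in hreflang_links(BASE_URL, published["manifesto"]):
        print(tag)
    print(missing_translations(published))
```

The same set of tags, including the one pointing at the page itself, would go in the `<head>` of every language version so the alternates reference one another.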

Outcome of Effective Multilingual Strategy

When multilingual optimization is implemented, AI search results present a more inclusive and accurate picture of a leader’s work across diverse voter groups.

This strategy reduces the bias toward English-only content and strengthens trust by ensuring that political brands are represented in the language voters use to engage with political discourse.

Case Studies

Case studies and hypotheticals highlight how AI search shapes political brand visibility compared to traditional search engines. Leaders like Narendra Modi, Rahul Gandhi, or Revanth Reddy may appear differently in AI outputs, where narratives are condensed into summaries rather than displayed as a list of sources.

International examples, such as Joe Biden and Donald Trump, also reveal how AI tools frame political identities around policy issues, controversies, or public perception.

These cases demonstrate how the availability of data, recency of coverage, and narrative dominance influence the way AI platforms present political leaders, often amplifying specific themes while overlooking others.

Indian Leaders: Modi, Rahul Gandhi, and Revanth Reddy

AI search presents Indian political leaders differently from traditional Google search. Narendra Modi often appears in AI responses framed around governance, economic reforms, and foreign policy. Google search, however, offers a broader mix of results that include government websites, news articles, and opinion pieces, leaving interpretation to the voter.

AI tools frequently describe Rahul Gandhi in terms of opposition politics and reformist rhetoric. In contrast, Google search highlights rallies, interviews, and news debates.

Revanth Reddy, who has a growing digital presence, may be featured in AI summaries through his work on welfare schemes, urban development, and his role as a challenger to established parties. Still, gaps in regional-language data sometimes limit the depth of representation.

International Leaders: Biden, Trump, and Sunak

Global leaders reveal similar contrasts. AI platforms often frame Joe Biden around policy discussions such as healthcare, climate action, and foreign relations, while traditional media coverage emphasizes approval ratings and partisan challenges.

Donald Trump is frequently presented in AI search through references to controversies and legal issues, which can overshadow his policy positions. Rishi Sunak is featured in AI summaries through his economic policies and his tenure as UK Prime Minister, while traditional media framing emphasizes the political challenges within his party and his electoral performance.

These comparisons show how AI systems condense narratives into dominant themes, which may exclude context available through traditional sources.

Lessons for Emerging Parties with Low Digital Visibility

Emerging political parties face a greater risk of underrepresentation in AI search results. Without consistent digital records, AI tools rely on sparse coverage or third-party commentary, which may distort their identity.

Unlike established parties that already generate large volumes of data, new entrants must actively build structured, multilingual content to ensure AI platforms capture their policies and leadership attributes.

Investing in verified websites, publishing manifestos in machine-readable formats, and engaging with credible media outlets are essential steps for emerging parties to secure visibility and avoid being overshadowed in AI-driven environments.

Ethical and Democratic Implications

AI search introduces ethical and democratic challenges by shaping how voters perceive political leaders and parties. Since these systems consolidate information into authoritative summaries, biases in training data or gaps in available content can distort political identity.

This raises questions about accountability, transparency, and fairness in the construction of narratives. If AI platforms amplify propaganda or exclude regional voices, they risk influencing voter perception without oversight.

Ensuring balanced representation, transparent disclosure of sources, and mechanisms for correcting misinformation is essential to protect democratic processes and maintain public trust in AI-driven political communication.

AI Search as an Unelected Gatekeeper: Does It Influence Voting Intentions?

AI search engines act as unelected gatekeepers by deciding which narratives about leaders, parties, and policies are presented to voters. Since these systems provide direct answers instead of lists of sources, they hold significant influence over how citizens form opinions.

If the outputs highlight controversies or omit achievements, they can shape voter perceptions in ways that affect electoral choices.

This influence raises concerns about democratic accountability, as AI tools operate without electoral legitimacy yet play a role in guiding political decision-making.

How AI Becomes a Gatekeeper

AI search platforms such as ChatGPT, Gemini, and Perplexity serve as gatekeepers because they filter vast amounts of political content and present it as authoritative summaries. Unlike traditional search engines that provide multiple sources for comparison, AI tools deliver a single synthesized response.

This centralization gives them the power to define what information voters see first and what issues are emphasized or ignored.

Influence on Voter Perceptions

By shaping narratives, AI platforms can influence how voters interpret the credibility and effectiveness of political leaders and parties. If the system highlights achievements, it can reinforce support.

If it amplifies controversies, it can undermine trust. Because many voters treat AI-generated responses as neutral and factual, these outputs can directly shape opinions about who deserves political legitimacy and leadership.

Risks for Democratic Accountability

The role of AI search raises democratic concerns because these systems exercise influence without electoral accountability. Their algorithms, training data, and reinforcement methods determine what voters learn about candidates, yet these processes are largely opaque.

When biased or incomplete information dominates, voters base their decisions on distorted portrayals rather than comprehensive evidence.

Why Oversight Is Necessary

To prevent AI search from disproportionately shaping elections, oversight and transparency are essential. Clear disclosure of sources, mechanisms for correcting misinformation, and balanced inclusion of regional voices can reduce the risk of biased outputs.

Without such safeguards, AI search functions as an unelected gatekeeper that influences voting behavior while remaining outside democratic checks and balances.

Transparency Demands: Should Election Commissions Regulate AI Responses?

The rise of AI search raises questions about transparency and accountability in political communication. Since AI tools generate authoritative summaries that can shape voter perception, there is a growing debate on whether election commissions should play a role in regulating these outputs.

Oversight could involve setting standards for source disclosure, ensuring corrections for misinformation, and requiring balanced representation of parties and policies. Such measures would help prevent bias and protect democratic integrity, while also holding AI platforms accountable for the influence they exert on electoral decision-making.

The Case for Oversight

AI platforms now act as influential sources of political information, yet their methods of content selection and narrative framing remain opaque. This lack of transparency creates risks for democratic fairness, particularly when misinformation, bias, or incomplete data shapes how leaders and parties are represented.

Election commissions, whose mandate includes ensuring free and fair elections, may need to play a role in regulating AI-driven outputs to prevent distortions that influence voter behavior.

Possible Regulatory Measures

Regulation could focus on ensuring source transparency, requiring AI platforms to disclose which materials inform their outputs. Election commissions could also mandate timely corrections when misinformation appears in AI responses.

Additionally, rules could require balanced representation of parties, policies, and regional voices so that smaller or emerging political actors are not excluded from AI summaries. If carefully designed, such measures need not restrict free speech; they would establish standards that protect fairness in electoral communication.

Challenges of Implementation

Oversight of AI search poses significant challenges. Election commissions would need technical expertise to evaluate how AI models process data and generate outputs. Global platforms may resist national regulations, citing jurisdictional limitations. Striking a balance between preventing bias and avoiding excessive control is critical, as over-regulation could raise concerns about censorship.

Why Regulation Matters

Without clear accountability, AI platforms can function as unelected influencers in democratic systems. Establishing transparency requirements and ensuring corrective mechanisms helps preserve public trust and strengthen the integrity of electoral processes.

Regulation, when designed with precision and fairness, can reduce risks of bias and ensure that AI search reflects accurate and diverse political perspectives.

Risk of Manipulation: Political Lobbying to Influence AI-Generated Answers

AI search outputs can be vulnerable to manipulation when political actors attempt to influence how leaders, parties, or policies are described. Lobbying efforts may focus on shaping training data, amplifying favorable narratives, or discrediting opponents through coordinated content campaigns.

If successful, these tactics can tilt AI-generated responses toward selective viewpoints, giving one side an unfair advantage in shaping voter perception.

This risk highlights the need for transparency in how AI systems source and rank information, as well as safeguards against undue political pressure on platforms that increasingly act as information gatekeepers.

How Manipulation Can Occur

Political lobbying can attempt to shape AI outputs by influencing the sources and narratives that models depend on.

Tactics may include amplifying favorable content through coordinated media campaigns, publishing large volumes of promotional material, or discrediting opponents with repeated negative framing.

Because AI systems prioritize widely available and frequently cited data, these strategies can push selective viewpoints into AI-generated answers.

Impact on Political Brand Visibility

If manipulation succeeds, AI platforms may present skewed portrayals of leaders or parties that emphasize promotional claims while minimizing criticism. This creates an uneven playing field, where the visibility of political actors depends less on verified achievements and more on their ability to control content ecosystems.

Such distortions can directly influence voter perceptions by framing one party as more credible or effective while delegitimizing opponents.

Risks to Democratic Integrity

Political lobbying aimed at AI platforms raises serious concerns about fairness and accountability. Unlike traditional media, where editorial oversight exists, AI search engines often lack transparent mechanisms to detect and correct manipulated narratives.

The absence of clear disclosure about data sources further increases the risk of voters being misled by biased outputs that appear neutral.

Safeguards Against Manipulation

Reducing this risk requires transparency from AI providers on how responses are generated and which sources carry the most weight. Election commissions and independent watchdogs could establish guidelines that prevent political actors from exerting undue pressure on AI platforms.

Political parties can also counter manipulation by consistently publishing verifiable and structured content, ensuring that AI systems have credible references to draw from.

Future of Political Brand Visibility in AI Ecosystems

The growing influence of AI assistants and personalized search will shape the future of political brand visibility. As voters increasingly rely on AI-driven platforms for quick answers, political identity will be defined less by traditional media and more by how effectively leaders and parties manage their presence within these systems.

Personalized AI interactions will tailor responses based on user behavior, making consistent, structured, and multilingual content essential for accurate representation. Parties that adapt early by investing in AI-focused strategies will not only improve visibility but also shape how political narratives are framed in emerging digital ecosystems.

Rise of Personalized AI Assistants Shaping Political Awareness

The increasing use of personalized AI assistants means that political awareness will become more individualized.

Instead of a single narrative for all voters, AI tools will adjust responses based on user behavior, search history, and regional interests.

This personalization can deepen voter engagement but also raises concerns about fragmented political realities, where different groups receive tailored versions of a leader’s identity or policy positions.

Predictive Search and Guided Voter Sentiment

AI systems are evolving from reactive tools that answer questions to predictive engines that anticipate queries. In a political context, predictive search could influence voter sentiment before campaigns begin by highlighting specific issues or policy areas that are likely to be important to voters.

For example, if unemployment dominates predictive prompts, leaders associated with job creation may gain visibility, while others risk being defined by their absence from that conversation. This shift makes proactive issue framing essential for parties that want to influence voter concerns early in the election cycle.

Next Wave: Agentic AI and Micro-Influence

The next phase of political marketing in AI ecosystems will involve agentic AI, where systems actively interact with users across multiple platforms. These AI agents may guide conversations, recommend content, and shape political awareness at a micro level.

For political campaigns, this creates opportunities for micro-influence, where personalized narratives are delivered through AI chat layers. Leaders and parties that prepare for this shift by building structured, multilingual, and verifiable content will have greater control over how agentic AI tools describe them to voters.

Why Future Readiness Matters

As AI ecosystems expand, political visibility will increasingly depend on adapting to these shifts rather than relying on traditional media or SEO-driven strategies.

Parties that anticipate the role of personalization, predictive guidance, and agentic AI will not only secure stronger visibility but also shape how future voters engage with political identity in digital environments.

Conclusion

Political brand presence in AI search has moved from being a secondary concern to an unavoidable necessity. Unlike traditional search engines, where voters explore multiple sources before forming opinions, AI-driven platforms consolidate vast amounts of information into a single, authoritative response.

This shift means that political leaders and parties cannot rely solely on speeches, rallies, or media coverage to shape their public image. Instead, they must ensure that accurate, structured, and verifiable information about their policies, achievements, and commitments is readily available in the digital ecosystems that AI models draw from.

Without this preparation, opponents, outdated narratives, or misinformation may define how AI systems present their identity to voters.

The strategic lesson is clear: the parties that actively engineer their presence in AI search today will shape tomorrow’s political discourse.

By investing in content engineering, strengthening authority signals, building issue-centric narratives, and ensuring multilingual visibility, political actors can shape how they are represented in the emerging AI ecosystem.

Reputation management and proactive monitoring of AI-generated outputs further protect against bias, narrative hijacking, and defamation risks.

As AI assistants and predictive search become integral to voter decision-making, visibility in these platforms will directly influence political outcomes.

The leaders and parties that adapt early will not only capture voter attention but also set the terms of debate in democratic societies that AI increasingly mediates.

Political branding in AI search is no longer optional—it is the new foundation for political legitimacy and voter trust in the digital era.

Political Brand Visibility in AI Search: FAQs

What Does Political Brand Visibility in AI Search Mean?

It refers to how leaders, parties, and policies are represented in AI-powered search platforms that deliver narrative-based answers instead of ranked links.

How Is AI Search Different From Traditional Google Search?

Google presents multiple sources, while AI tools generate direct summaries, which makes them more influential in shaping voter perceptions.

Why Is AI Search Presence Important for Political Communication?

Because AI-driven responses are often treated as authoritative, they influence how voters perceive credibility, leadership, and policy effectiveness.

How Do Large Language Models Summarize Political Figures and Policies?

They analyze recurring themes across training data, such as news reports, manifestos, speeches, and social media, and generate a condensed narrative.

What Risks Does Algorithmic Framing Pose in AI Search?

It can highlight selective issues, amplify controversies, or omit achievements, leading to biased or incomplete portrayals.

What Role Does Training Data and Recency Play in Political Branding?

Training data establishes the baseline narrative, while recent coverage often dominates outputs, meaning temporary controversies can overshadow long-term work.

What Are Authority Signals in AI Search Visibility?

Verified websites, think tank publications, and manifestos that provide structured, credible content recognized by AI platforms.

How Do Semantic Clusters Help Political Branding in AI?

By repeatedly linking a leader or party to issues like education, jobs, or governance, they strengthen associations that AI tools amplify in responses.

What Is Narrative Hijacking in AI Search?

When opponents flood digital spaces with propaganda or criticism, causing AI systems to frame leaders through negative narratives.

What Happens When There Are Information Gaps About Leaders or Policies?

AI tools rely on outdated or incomplete data, which can lead to misrepresentation or diminished visibility.

How Do Defamation Risks Arise in AI Responses?

Unverified claims from news, social media, or commentary can be embedded in AI-generated outputs and presented as factual.

What Is Regional Language Bias in AI Search?

It is a visibility gap that arises when English content dominates AI outputs while regional-language material remains underrepresented.

How Do Engagement Signals Influence AI Search?

Debates, speeches, and viral social media references generate digital traces that AI systems capture and use to frame political identity.

What Strategies Can Strengthen Political Brand Visibility in AI?

Content engineering, issue-centric branding, AI reputation management, and multilingual optimization are key approaches.

How Does Content Engineering Improve AI Visibility?

Publishing structured FAQs, policy briefs, and manifestos in machine-readable formats ensures AI models access accurate and verifiable data.

Why Is Issue-Centric Presence Important for Political Brands?

It ties a leader’s identity to voter priorities, such as jobs or welfare, preventing AI tools from overemphasizing controversies.

What Does AI Reputation Management Involve?

Monitoring how AI tools describe leaders and correcting misinformation through verified content and credible media coverage.

Why Is Multilingual Optimization Necessary?

It ensures representation across both English and regional-language AI queries, reducing visibility gaps in multilingual societies.

How Can Political Lobbying Manipulate AI Search?

Lobbying efforts can amplify selective narratives or discredit opponents through coordinated campaigns, influencing how AI platforms summarize leaders.

What Is the Future of Political Branding in AI Ecosystems?

Personalized assistants, predictive search, and agentic AI will define political identity, making early investment in AI visibility critical for electoral success.